Corecore: committed to computer vision technology for autonomous driving

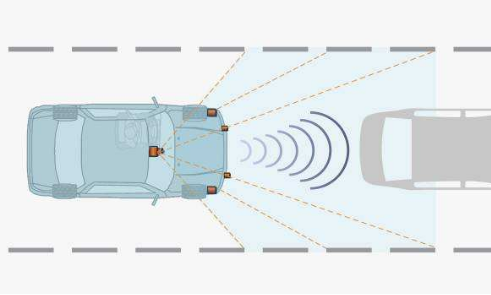

Vision is one of the most important ways humans observe and understand the world. About 75% of the information humans obtain from the outside world comes through the visual system, and about 90% of the information a driver needs while driving comes from vision. Among the environmental perception methods currently used in driver-assistance systems, visual sensors can capture more detailed, more accurate, and richer information about road structure than ultrasonic sensors and lidar. A prerequisite for autonomous driving is recognizing road conditions and detecting the distance and speed of vehicles and obstacles. Only once this problem is solved does it become possible to control the car.

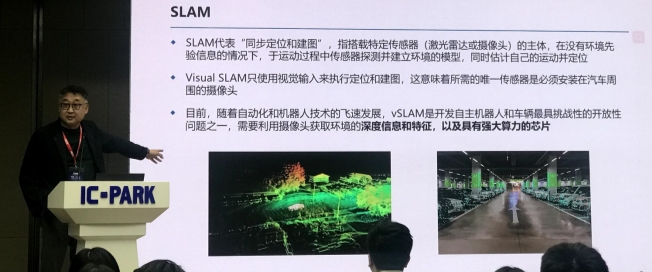

At the 4th "Core Motion Beijing" Zhongguancun IC Industry Forum, Li Shenwei, CEO of Beijing Corecore Technology Co., Ltd., introduced the company's technical direction. Corecore was jointly initiated by Li Shenwei, a veteran of the semiconductor industry, together with BAIC, Imagination, and Cuiwei. As the first automotive chip design company jointly established by a Chinese state-owned automaker and an international chip giant, Corecore will focus on developing application processors for autonomous driving and chips for smart cockpits, providing advanced automotive chip solutions to domestic car companies such as BAIC Group. In positioning technology in particular, Corecore favors SLAM.

SLAM: a popular technology in autonomous driving navigation

Li Shenwei noted that 3D positioning technology is very important in autonomous driving. Imagine a car without GPS: how would it determine its position? The core idea of SLAM is to build a 3D model of the surroundings while driving, so that the car can identify the objects around it. This is the focus of Corecore's future investment.

SLAM is short for Simultaneous Localization And Mapping and was first proposed by Hugh Durrant-Whyte and John J. Leonard in 1988. SLAM is less a specific algorithm than a concept: it is the general term for methods that solve the following problem. A robot starts from an unknown location in an unknown environment, repeatedly observes map features (such as wall corners and pillars) as it moves, uses them to determine its own position and orientation (its pose), and then builds a map incrementally based on that pose, thereby localizing itself and constructing the map at the same time.

In general, SLAM consists of three core processes: perception, positioning, and mapping.

Perception: the robot obtains information about its surroundings through sensors.

Positioning: the robot infers its own position and orientation from the current and historical information obtained by its sensors.

Mapping: based on its own pose and the sensor information, the robot builds a representation of the environment around it.
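To make the perception / positioning / mapping loop concrete, here is a deliberately simplified 1-D toy in Python. It assumes a robot moving along a line that reads odometry and measures the signed offset to numbered landmarks; the class `ToySlam1D` and its `step` method are hypothetical names. Positioning blends the odometry prediction with the poses implied by landmarks already on the map, using an equal-weight average as a crude stand-in for the probabilistic update (Kalman filtering or graph optimization) that real SLAM systems use, and mapping places new landmarks and refines known ones with a running mean.

```python
from dataclasses import dataclass, field


@dataclass
class ToySlam1D:
    """Toy 1-D illustration of the SLAM loop; not EKF-SLAM or graph SLAM."""
    pose: float = 0.0                                          # estimated position on the line
    landmarks: dict[int, float] = field(default_factory=dict)  # landmark id -> estimated position
    counts: dict[int, int] = field(default_factory=dict)       # times each landmark was mapped

    def step(self, odometry: float, observations: dict[int, float]) -> float:
        # Perception: `observations` maps landmark id -> measured offset (landmark - robot).
        # Positioning, prediction: dead-reckon from the previous pose estimate.
        predicted = self.pose + odometry
        # Positioning, correction: each landmark already on the map implies a pose.
        fixes = [self.landmarks[i] - z for i, z in observations.items() if i in self.landmarks]
        # Equal-weight average of prediction and landmark fixes (crude stand-in for a
        # proper probabilistic update); with no fixes this is just the prediction.
        self.pose = (predicted + sum(fixes)) / (1 + len(fixes))
        # Mapping: add unseen landmarks, refine known ones with a running mean.
        for i, z in observations.items():
            estimate = self.pose + z
            n = self.counts.get(i, 0)
            if n == 0:
                self.landmarks[i] = estimate
            else:
                self.landmarks[i] += (estimate - self.landmarks[i]) / (n + 1)
            self.counts[i] = n + 1
        return self.pose


if __name__ == "__main__":
    slam = ToySlam1D()
    slam.step(0.0, {0: 2.0, 1: 5.0})         # robot at 0 sees landmarks at 2 and 5
    pose = slam.step(1.2, {0: 1.0, 1: 4.0})  # true move is 1.0, odometry over-reports 1.2
    print(pose, slam.landmarks)              # pose about 1.07, closer to 1.0 than dead reckoning
```

Because the corrected pose still carries some odometry error, refining the landmarks with it nudges the map slightly as well (by about 3 cm in this run). Handling that coupling between pose uncertainty and map uncertainty properly is what full SLAM formulations are designed to do.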

Binocular stereo vision: better performance under strong light

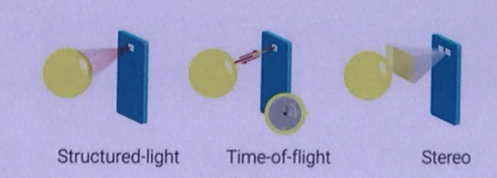

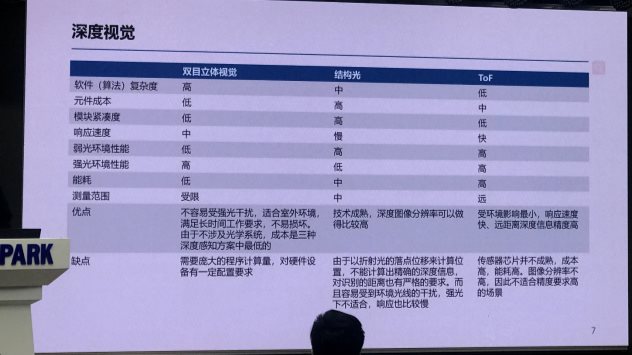

In addition, current machine vision has three mainstream depth-sensing technologies: structured light, TOF, and binocular stereo vision.

TOF (time of flight): simply put, distance is calculated from the time light takes to travel to the object and back.
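As a worked example of the TOF principle: because the light travels to the object and back, the distance is half the round-trip path, d = c·t/2. Many TOF depth cameras instead measure the phase shift of amplitude-modulated light, which gives d = c·Δφ/(4π·f_mod) and is only unambiguous up to c/(2·f_mod). The Python sketch below shows both formulas; the function names and example numbers are illustrative assumptions, not taken from any particular sensor.

```python
import math

SPEED_OF_LIGHT = 299_792_458.0  # metres per second (exact SI value)


def tof_distance_pulsed(round_trip_s: float) -> float:
    """Direct (pulsed) TOF: halve the round-trip path travelled by the light."""
    if round_trip_s < 0:
        raise ValueError("round-trip time must be non-negative")
    return SPEED_OF_LIGHT * round_trip_s / 2.0


def tof_distance_phase(phase_rad: float, mod_freq_hz: float) -> float:
    """Indirect (continuous-wave) TOF: distance from the phase shift of modulated light.

    Only valid inside the unambiguous range: a farther target produces a phase that
    has wrapped past 2*pi and aliases to a shorter distance.
    """
    return SPEED_OF_LIGHT * phase_rad / (4.0 * math.pi * mod_freq_hz)


def unambiguous_range(mod_freq_hz: float) -> float:
    """Largest distance a continuous-wave TOF sensor can measure without phase wrapping."""
    return SPEED_OF_LIGHT / (2.0 * mod_freq_hz)


if __name__ == "__main__":
    print(tof_distance_pulsed(20e-9))         # 20 ns round trip -> about 3.0 m
    print(unambiguous_range(20e6))            # 20 MHz modulation -> about 7.5 m
    print(tof_distance_phase(math.pi, 20e6))  # half a cycle of phase shift -> about 3.7 m
```

The pulsed formula also shows why TOF hardware needs very precise timing: an error of just 1 ns in the round-trip time shifts the measured distance by about 15 cm.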

Structured light: an infrared laser projects light with a known structural pattern onto the object being photographed, a dedicated infrared camera captures the reflected pattern, and depth is calculated by triangulation.
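The triangulation step can be illustrated with the classic single-point case. Assume the projector and camera sit at the two ends of a known baseline b, the decoded pattern tells us the angle α at which the projector emitted the ray that lit a given point, and the camera pixel gives the angle β of the returning ray, both measured from the baseline. Applying the law of sines to the projector-camera-point triangle gives the point's perpendicular distance from the baseline, Z = b·sin α·sin β / sin(α + β). The Python sketch below uses a hypothetical helper name, `triangulate_depth`, and ignores lens distortion and calibration.

```python
import math


def triangulate_depth(baseline_m: float, alpha_rad: float, beta_rad: float) -> float:
    """Perpendicular distance from the projector-camera baseline to the lit point.

    alpha_rad: angle of the projected ray at the projector, measured from the baseline.
    beta_rad:  angle of the observed ray at the camera, measured from the baseline.
    """
    if not (0 < alpha_rad < math.pi and 0 < beta_rad < math.pi
            and alpha_rad + beta_rad < math.pi):
        raise ValueError("the two rays do not meet in front of the baseline")
    # Law of sines: height of a triangle with base b and base angles alpha and beta.
    return baseline_m * math.sin(alpha_rad) * math.sin(beta_rad) / math.sin(alpha_rad + beta_rad)


if __name__ == "__main__":
    # Hypothetical numbers: 10 cm baseline, rays at 80 and 85 degrees from the baseline.
    z = triangulate_depth(0.10, math.radians(80), math.radians(85))
    print(f"{z:.3f} m")
```

As the two rays approach parallel, sin(α + β) approaches zero, so small angle errors turn into large depth errors for distant points.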

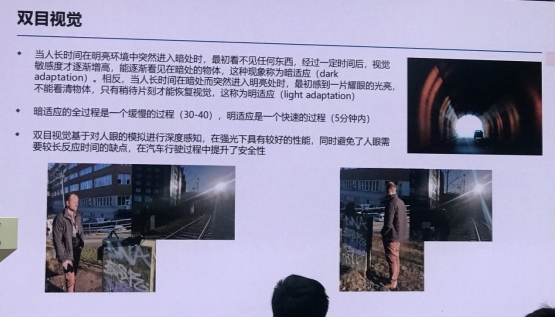

Binocular stereo vision: the principle is similar to that of a Leica rangefinder camera, with some parallax compensation for short and long distances. Corecore is very optimistic about this technology because it is less susceptible to interference from strong light, is low-cost, and is free of patent complications. Li Shenwei explained that the human eye adapts slowly when going from bright light to total darkness, but relatively quickly when going from darkness to bright light. TOF and structured light have certain shortcomings in this respect. The recent collision of a Tesla with an overturned white truck is a good example: the machine vision system failed to register that there was an object ahead.
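For a rectified stereo pair (parallel cameras with aligned image rows), depth follows from disparity, the horizontal shift of a point between the left and right images: Z = f·B/d, with f the focal length in pixels, B the baseline between the cameras, and d the disparity in pixels. Differentiating gives ΔZ ≈ Z²/(f·B)·Δd, so a fixed one-pixel matching error costs far more depth accuracy at long range than at short range. The Python sketch below assumes rectified grayscale rows and finds d with simple sum-of-absolute-differences (SAD) block matching along one scanline; the function names, window size, and camera parameters are illustrative assumptions, and production stereo matchers (for example semi-global matching) are considerably more robust.

```python
from typing import Sequence


def scanline_disparity(left: Sequence[float], right: Sequence[float],
                       x: int, max_disp: int, half_win: int = 2) -> int:
    """SAD block matching on one rectified scanline.

    A point at column x in the left image appears at column x - d in the right
    image; return the d in [0, max_disp] whose window has the lowest cost.
    """
    if x - half_win < 0 or x + half_win >= len(left):
        raise ValueError("matching window falls outside the image row")
    best_d, best_cost = 0, float("inf")
    for d in range(max_disp + 1):
        if x - d - half_win < 0:
            break  # candidate window would leave the right image
        cost = sum(abs(left[x + k] - right[x - d + k])
                   for k in range(-half_win, half_win + 1))
        if cost < best_cost:
            best_d, best_cost = d, cost
    return best_d


def depth_from_disparity(disparity_px: float, focal_px: float, baseline_m: float) -> float:
    """Z = f * B / d for a rectified pair; zero disparity means a point at infinity."""
    if disparity_px <= 0:
        raise ValueError("disparity must be positive")
    return focal_px * baseline_m / disparity_px


def depth_uncertainty(depth_m: float, focal_px: float, baseline_m: float,
                      disparity_err_px: float = 1.0) -> float:
    """First-order depth error: dZ ~= Z^2 / (f * B) * dd."""
    return depth_m ** 2 / (focal_px * baseline_m) * disparity_err_px


if __name__ == "__main__":
    right = [5, 9, 2, 7, 1, 8, 3, 6, 4, 0, 9, 1, 7, 2, 8, 5, 3, 6, 0, 4]
    left = [0, 0, 0] + right[:-3]   # synthetic left row: content shifted right by 3 px
    d = scanline_disparity(left, right, x=10, max_disp=6)
    f_px, baseline = 700.0, 0.12    # hypothetical camera: 700 px focal length, 12 cm baseline
    z = depth_from_disparity(d, f_px, baseline)
    print(d, z)                                   # disparity 3 px -> depth of about 28 m
    print(depth_uncertainty(z, f_px, baseline))   # +-1 px at 28 m is worth about 9.3 m
```

The same one-pixel error that costs about 9 m at 28 m costs under 5 cm at 2 m, which is why the baseline and focal length are chosen with the intended working distance in mind.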

In developing its chips, Corecore plans to design around the advantages of binocular stereo vision and pair them with the latest GPU and artificial intelligence accelerators, continuing to advance ADAS and intelligent-driving perception chips.

Corecore's smart cockpit and L2-L4 multi-level environmental perception solutions are reportedly expected to achieve successful tapeout and mass production in 2021 and 2022, respectively. At present, autonomous driving and smart cockpit chips with independent IP remain scarce. Breakthroughs in these fields are of great significance to the domestic smart car industry, and the market potential is broad.